Understanding the Difference Between a Data Lake and a Data Swamp in Electronics Manufacturing

In the vast realm of data management, two terms often emerge (especially given the adoption rate of AI): data lake and data swamp. While both involve storing and processing large volumes of data, they represent contrasting approaches and outcomes. In this blog, Cogiscan dives into the differences between a lake and a swamp, exploring their definitions, characteristics, benefits, and pitfalls.

Defining the Data Lake

SOURCE: Cogiscan blog

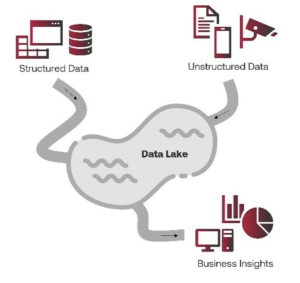

A data lake is a centralized repository that allows organizations to store structured, semi-structured, and unstructured data at any scale. In PCBA manufacturing, this could refer to machine data – from simple events like machine states all the way to more complex measurement data from an AOI including images, sensor-based data, as well as any other kind of enterprise system data.

Unlike traditional data warehouses, which require upfront data modeling and predefined schema, data lakes embrace a schema-on-read approach. This means that data is ingested in its raw form, preserving its original structure, and allowing flexibility for analysis and exploration later. It’s particularly the exploration later that’s garnered the favor of so many electronics manufacturers.

Key Characteristics of a Data Lake

- Raw and Diverse Data: A data lake accommodates a wide variety of data types, including machine data, sensor data, test pictures, text files, and more. It eliminates data silos and enables data integration from multiple sources, which is crucial for any digital factory.

- Scalability and Cost-effectiveness: Data lakes are typically built on scalable and distributed systems such as cloud-based platforms. They provide storage solutions by leveraging commodity hardware and pay-as-you-go cloud services.

- Agility and Flexibility: With a data lake, organizations can store vast amounts of data without schema definitions. This enables agile data exploration, ad-hoc analysis, and the ability to ask new questions as data needs evolve. In our industry, this means that you could perform in-depth analysis using your favorite BI tool without having to embark on a batch process.

Understanding the Data Swamp

Key Characteristics of a Data Swamp

- Lack of Data Governance: Data governance involves defining policies, standards, and procedures for data management. In a data swamp, data governance is either nonexistent or poorly implemented, resulting in data inconsistencies, duplication, and inaccuracies.

- Chaotic and Unorganized Data: In a data swamp, data is ingested without proper metadata management, making it difficult to discover, understand, and utilize effectively. Data is often scattered, unclassified, and lacks documentation, leading to confusion and inefficiency.

- Limited Data Accessibility and Trust: Due to the absence of governance and organization, users may lack confidence in the data quality and reliability. This hinders data-driven decision-making and reduces the overall value of the data lake.

Why is this so crucial for PCBA manufacturers?

Having a well-governed data lake is the foundation for many digitalization initiatives. Among others, we can think about:

- Quality Control: By storing and analyzing data from various stages of the manufacturing process, such as component sourcing, assembly, testing, and inspection, manufacturers can identify patterns, detect anomalies, and make data-driven decisions to improve product quality. Learn more!

- Traceability and Root Cause Analysis: Data lakes facilitate comprehensive traceability within the manufacturing process. By capturing data related to component suppliers, production line parameters, test results, and customer feedback, PCBA manufacturers can quickly identify the root causes of issues, address them promptly, and implement preventive measures to avoid similar problems in the future. Learn more!

- Real-time Monitoring and Predictive Maintenance: Leveraging data lake capabilities, PCBA manufacturers can establish real-time monitoring systems that capture data from sensors and equipment on the production line. Analyzing this data can help identify maintenance needs, predict equipment failures, and optimize production processes to minimize downtime and increase operational efficiency. Learn more!

- More than anything else, AI (Artificial Intelligence) needs a well-defined structure, often refers at as “flat data structure”. In essence, any AI project heavily relies on data, and structure plays a crucial role. Regardless of how long data has been collected, without a well-defined structure and clear intentions from the outset, starting from scratch might be necessary. In other words, data accumulation alone is not enough for an AI project to succeed. To avoid your data lake into turning into a useless swamp, it is imperative to invest time and effort into organizing and structuring the data right from the beginning. Without a solid foundation, the path to a successful AI project becomes uncertain and potentially requires restarting the entire process. If you want to learn more about this topic, we encourage to read our other article Why do 85% of AI projects fail…and how can you make yours have a positive ROI.

Conclusion